- #WEB SCRAPING USING JAVASCRIPT HOW TO#

- #WEB SCRAPING USING JAVASCRIPT INSTALL#

- #WEB SCRAPING USING JAVASCRIPT DRIVERS#

- #WEB SCRAPING USING JAVASCRIPT CODE#

It also supports the option to pass custom web drivers using the argument executable_path.

#WEB SCRAPING USING JAVASCRIPT CODE#

Often, intercepting API responses is an easier way to get the data than diving into the DOM, but in this case it's straightforward to select each of the item elements, then retrieve the item's unique id and array of tag text: `var data =`

The quickest way to get the code into the browser is to copy and paste it into the console. In this example, however, we're working with a long-running scrape on a site we'd like to close and come back to, so a userscript manager like Tampermonkey is the best tool for the job: the userscript automatically executes the browser code whenever we visit the site.
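As a sketch of that step, here is one way a Tampermonkey userscript could extract the items, watch `#feed` for new ones with a `MutationObserver`, and POST anything it hasn't seen yet to a local back-end. It assumes hypothetical markup (items matching `#feed .item`, the unique id in a `data-id` attribute, tags with a `.tag` class); the `@match` URL and the `http://localhost:3000/items` endpoint are placeholders, not part of the original post.

```javascript
// ==UserScript==
// @name         Feed tag scraper (sketch)
// @match        https://example.com/*
// @grant        none
// ==/UserScript==

// Hypothetical values: adjust them to the real markup and back-end.
const ITEM_SELECTOR = "#feed .item"; // one element per feed item
const TAG_SELECTOR = ".tag";         // tag elements inside an item
const BACKEND_URL = "http://localhost:3000/items";

// Read each item's unique id (from a data-id attribute) and its tag texts.
function extractItems(root) {
  return [...root.querySelectorAll(ITEM_SELECTOR)].map(el => ({
    id: el.dataset.id,
    tags: [...el.querySelectorAll(TAG_SELECTOR)].map(t => t.textContent.trim()),
  }));
}

// Return only items whose ids haven't been seen yet, recording them as seen.
function takeUnseen(items, seen) {
  const fresh = [];
  for (const item of items) {
    if (!seen.has(item.id)) {
      seen.add(item.id);
      fresh.push(item);
    }
  }
  return fresh;
}

// POST a batch as JSON; failures are logged so a stopped server can't break the page.
function sendItems(items) {
  return fetch(BACKEND_URL, {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify(items),
  }).catch(err => console.error("Failed to send items:", err));
}

// Browser only: send the items already on the page, then any that appear later.
if (typeof document !== "undefined") {
  const seen = new Set();
  const flush = () => {
    const fresh = takeUnseen(extractItems(document), seen);
    if (fresh.length > 0) sendItems(fresh);
  };
  flush();
  const feed = document.querySelector("#feed");
  if (feed) {
    new MutationObserver(flush).observe(feed, { childList: true, subtree: true });
  }
}
```

Because the observer re-reads the whole feed on every mutation, the `seen` set keeps an item from being sent twice during one page visit. It resets on reload, so whatever receives the data should tolerate duplicates.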

#WEB SCRAPING USING JAVASCRIPT HOW TO#

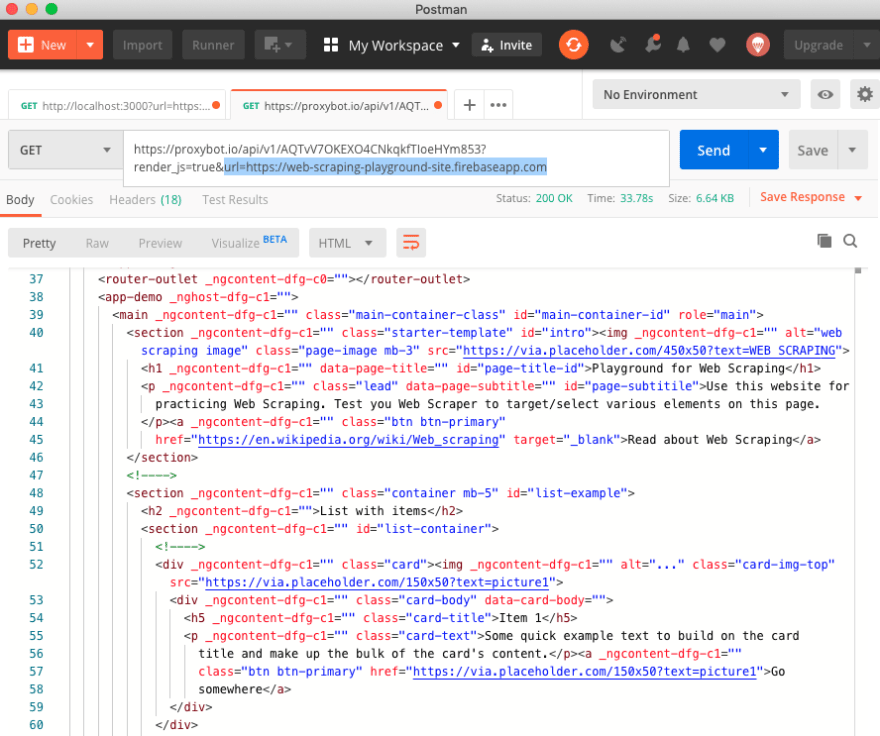

This post shares a strategy for quickly scraping dynamic pages in real time without libraries, using the browser console and a simple local back-end. Although this approach isn't a drop-in replacement for browser automation, it offers a nice alternative for your web scraping toolkit.

In the post, I assume you're familiar with JavaScript, CSS selectors, DOM fundamentals and HTTP. While I won't use any libraries, I'll still use a few pieces of software:

- Tampermonkey 4.16.1 (browser extension for userscript automation)
- Node.js v17.4.0 (server-side runtime environment)

There's nothing particularly special about my stack. Firefox and a Python back-end will work just as well, and all of the tools other than the browser are optional and use-case dependent. I developed on Windows 10, but the code should work fine on Linux and macOS.

The page we're scraping is a simplified single-page app feed. Every so often, a new item shows up in the feed, and each item has a list of tags representing popular technologies. In a real site, items might be job or real estate listings, things for sale, new releases, (micro) blog posts or chat messages. The mock site's script includes helpers such as `const rand = n => Math.floor(Math.random() * n)`, which returns a random integer from 0 to n - 1, and `const feed = document.querySelector("#feed")`, a reference to the `#feed` element. From a scraping perspective, though, the mock website's code isn't as important to understand as the site's behavior and structure. Feel free to run the mock site's code and peek at the element inspector to get a sense of the app.

The scrape should capture a good sampling of the tags that appear on the site over a few weeks or months, which lets me determine which technologies are in demand and compare their relative popularities. The first step is to use the developer tools to figure out how to access the data.
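The strategy relies on a simple local back-end running on Node.js. A minimal sketch of one, using only Node's built-in `http` and `fs` modules, could accept scraped items as JSON, skip ids it has already stored, persist everything to disk so the scrape can be stopped and resumed, and tally how often each tag appears. The port, the `/items` and `/counts` routes, the `data.json` file and the `[{ id, tags }]` payload shape are hypothetical choices for illustration, not the author's implementation.

```javascript
// Minimal local back-end for collecting scraped items (Node.js, no libraries).
// Hypothetical choices: port 3000, POST /items, GET /counts, data.json,
// and a JSON payload shaped like [{ id, tags: [...] }].
const http = require("http");
const fs = require("fs");

const PORT = 3000;
const DATA_FILE = "data.json";

// Merge incoming items into the store (id -> tags), skipping ids already stored,
// so re-sending an item after a page reload is harmless. Returns how many were new.
function mergeItems(store, incoming) {
  let added = 0;
  for (const item of incoming) {
    if (item && item.id !== undefined && Array.isArray(item.tags) &&
        !Object.prototype.hasOwnProperty.call(store, item.id)) {
      store[item.id] = item.tags.map(String);
      added++;
    }
  }
  return added;
}

// Count how often each tag occurs across stored items, most frequent first
// (ties broken alphabetically).
function tagCounts(store) {
  const counts = new Map();
  for (const tags of Object.values(store)) {
    for (const tag of tags) counts.set(tag, (counts.get(tag) || 0) + 1);
  }
  return [...counts].sort((a, b) => b[1] - a[1] || a[0].localeCompare(b[0]));
}

function startServer() {
  // Reload earlier results so the scrape can be stopped and resumed.
  const store = fs.existsSync(DATA_FILE)
    ? JSON.parse(fs.readFileSync(DATA_FILE, "utf8"))
    : {};

  http.createServer((req, res) => {
    // The scraper runs on the scraped site's origin, so allow cross-origin requests.
    res.setHeader("Access-Control-Allow-Origin", "*");
    res.setHeader("Access-Control-Allow-Headers", "Content-Type");
    if (req.method === "OPTIONS") return res.end(); // CORS preflight

    if (req.method === "POST" && req.url === "/items") {
      let body = "";
      req.on("data", chunk => (body += chunk));
      req.on("end", () => {
        try {
          const added = mergeItems(store, JSON.parse(body));
          fs.writeFileSync(DATA_FILE, JSON.stringify(store));
          res.setHeader("Content-Type", "application/json");
          res.end(JSON.stringify({ added, total: Object.keys(store).length }));
        } catch (err) {
          res.statusCode = 400; // malformed JSON or a non-array payload
          res.end(String(err));
        }
      });
      return;
    }

    if (req.method === "GET" && req.url === "/counts") {
      res.setHeader("Content-Type", "application/json");
      return res.end(JSON.stringify(tagCounts(store)));
    }

    res.statusCode = 404;
    res.end();
  }).listen(PORT, () => console.log(`Listening on http://localhost:${PORT}`));
}

// Start only when asked (node server.js --serve), so the helpers can be loaded on their own.
if (process.argv.includes("--serve")) startServer();
```

Run it with `node server.js --serve` (the file name is arbitrary) and open `http://localhost:3000/counts` for the running tally. Keying the store by item id makes repeated sends idempotent, which matters when the browser side re-reads the same items on every visit.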

#WEB SCRAPING USING JAVASCRIPT INSTALL#

Let's suppose there's a website you'd like to scrape data from, monitor, or otherwise manipulate. A quick look at the site's static HTML shows a JavaScript-driven single-page application. At this point, you'd usually reach for a browser automation library such as Selenium, Puppeteer or Playwright. Those tools are fantastic, but often unnecessary: browser automation can be heavy on memory and CPU, take time to install and learn, and run into detection issues, login screens and other annoyances standing between you and your goal.
